Dating with Python 💝

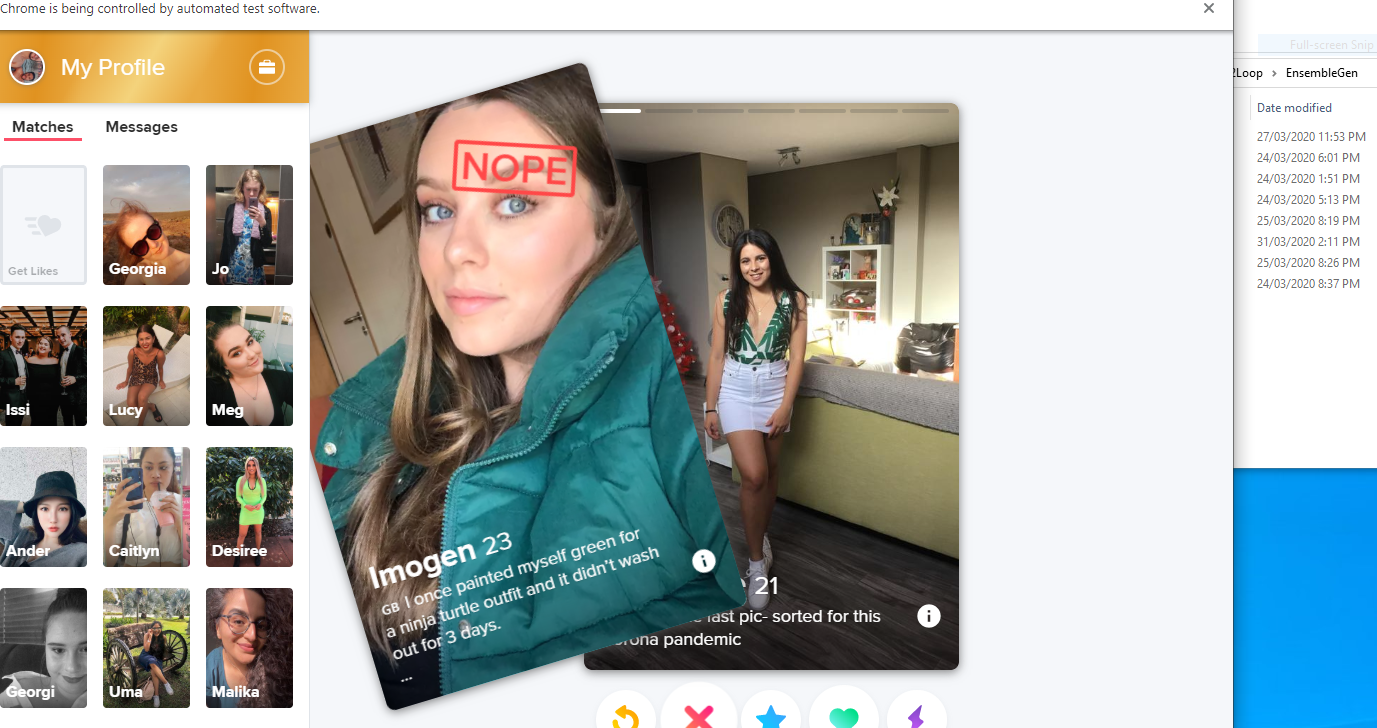

Automating dating apps with Selenium and encoding your attraction preferences with machine learning.

On average, dating app users spend 90 minutes a day swiping. For the non-chad, the likelihood this effort amounts to anything is slim.

We can automate away this depression and optimise emotional investment with a bot to perform the swiping and a machine learning model to decide which way. Then, set your notifications to alert on matches and new messages.

Setup

Selenium is an automation tool that simulates interacting with a browser, and scikit-learn is a popular machine learning library.

- Download the chromedriver version that matches your installed Chrome version, and put it somewhere on your PATH

- Install Python >= 3.6, selenium, undetected_chromedriver, requests, pillow, NumPy, pandas and scikit-learn

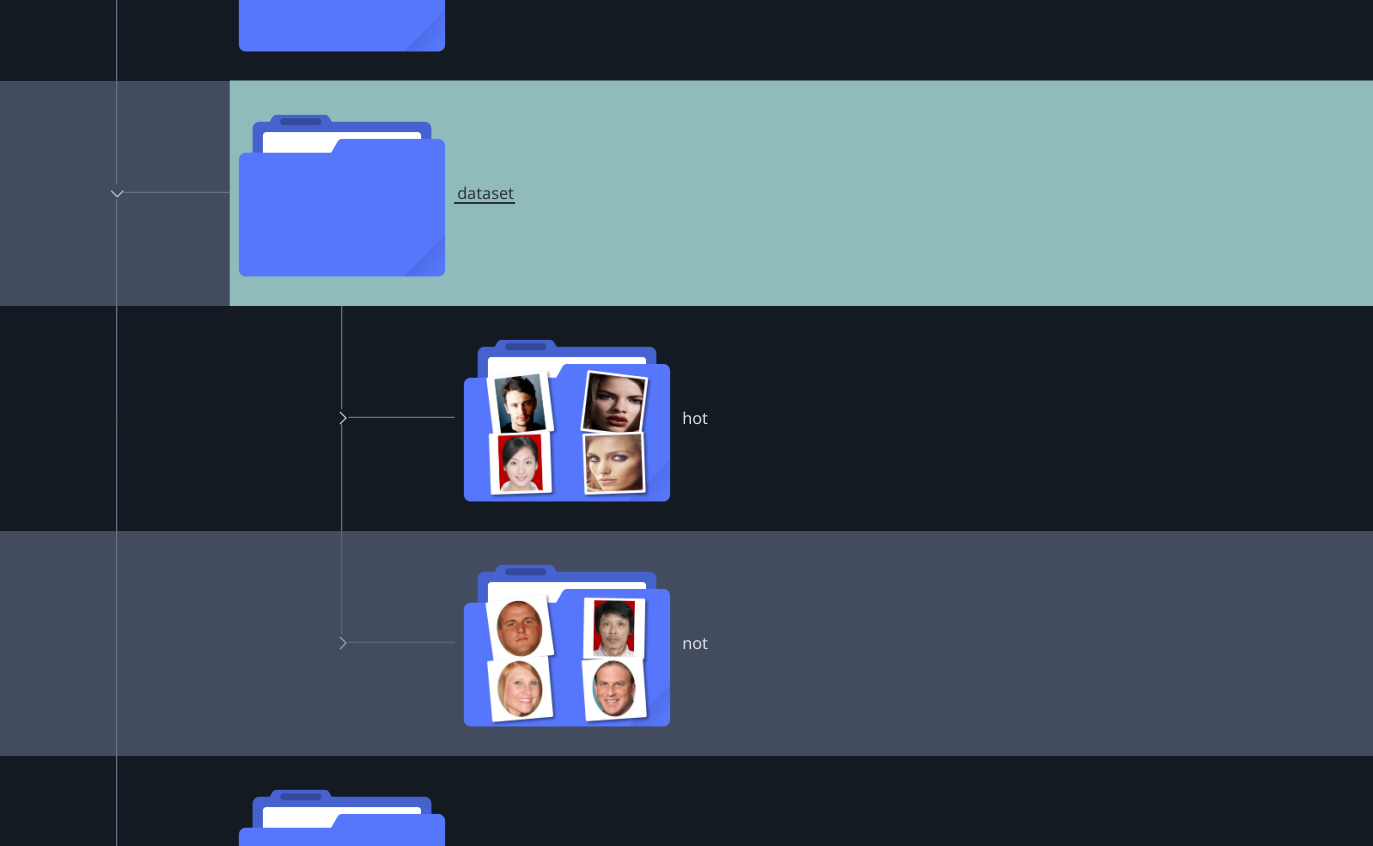

- I highly recommend you build a dataset. I used a selection from Liang et al. and Kaggle. The bot will continue to scrape and add images accordingly
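
Before going further, it may help to confirm the packages from the list above are importable. The sketch below is one way to do that; the `missing_modules` helper and the `REQUIRED_MODULES` list are illustrative names, not part of the original bot, and the import names (`PIL` for pillow, `sklearn` for scikit-learn) differ from the pip names.

```python
from importlib.util import find_spec

# Import names, which differ from the pip names for some packages
# (pillow -> PIL, scikit-learn -> sklearn).
REQUIRED_MODULES = [
    "selenium",
    "undetected_chromedriver",
    "requests",
    "PIL",
    "numpy",
    "pandas",
    "sklearn",
]


def missing_modules(names):
    """Return the top-level module names that cannot be found."""
    return [name for name in names if find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("All dependencies found.")
```

Anything reported as missing can be installed with pip under its package name.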

The Swiping

We'll start here because some of you horn dogs 'swipe right' indiscriminately, and the ML section wouldn't even be applicable.

Selenium works by isolating page elements via their HTML properties (tags, classes and ids) or XPaths and sending user actions like clicks, scrolls or text input.

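    from selenium.webdriver.common.by import By

    # Click the big blue button (driver is a Selenium WebDriver instance)
    driver.find_element(By.ID, "bigBlueButton").click()

    # Get a list of references to every button on the page
    all_buttons = driver.find_elements(By.TAG_NAME, "button")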

For those itching to ctrl-c, the code is below. I'm using Tinder, but the steps are similar across apps: initialise the Chrome driver and navigate to the site, enter your credentials and log in, then identify the XPaths of the like and dislike buttons.

    import os
    import pickle
    import random
    import re
    import shutil
    import time
    import uuid

    # undetected-chromedriver >= 3.1 exposes the former v2 API at the top level
    import undetected_chromedriver as uc
    from selenium.webdriver.common.by import By

    from build_dataset import img_to_feature_vec


    class Bot:
        def __init__(self):
            chrome_options = uc.ChromeOptions()
            chrome_options.add_argument("--disable-extensions")
            chrome_options.add_argument("--lang=en-GB")
            prefs = {
                'profile.default_content_setting_values': {
                    'notifications': 0,
                    'geolocation': 1
                },
                'profile.managed_default_content_settings': {
                    'geolocation': 1
                },
            }
            chrome_options.add_experimental_option('prefs', prefs)
            self.driver = uc.Chrome(options=chrome_options)

        def login(self, email, password):
            self.driver.get('https://tinder.com/')
            time.sleep(3)

            # Store unique session id
            self.session_id = self.driver.find_elements(
                By.TAG_NAME, 'div')[0].get_attribute('id')

            # Decline trackers and cookies
            cookie_xpath = f'//*[@id="{self.session_id}"]/div/div[2]/div/div/div[1]/div[1]/button'
            try:
                self.driver.find_element(By.XPATH, cookie_xpath).click()
            except Exception:
                print('No Cookie prompt found... Trying again.')
                time.sleep(2)
                self.driver.find_element(By.XPATH, cookie_xpath).click()
            time.sleep(2)

            # Open the login portal and choose Google sign-in
            login_xpath = f'//*[@id="{self.session_id}"]/div/div[1]/div/div/main/div/div[2]/div/div[3]/div/div/button[2]'
            google_selector = '[aria-label="Log in with Google"]'
            self.login_button = None
            self.google_login_button = None
            try:
                self.login_button = self.driver.find_element(By.XPATH, login_xpath)
                self.login_button.click()
                time.sleep(2)
                self.google_login_button = self.driver.find_element(
                    By.CSS_SELECTOR, google_selector)
                self.google_login_button.click()
            except Exception:
                print("Couldn't open login portal... Trying again")
                time.sleep(2)
                self.login_button = self.driver.find_element(By.XPATH, login_xpath)
                self.login_button.click()
                time.sleep(2)
                self.google_login_button = self.driver.find_element(
                    By.CSS_SELECTOR, google_selector)
                self.google_login_button.click()
            time.sleep(2)

            try:
                # Switch to pop up login window
                window_before = self.driver.window_handles[0]
                window_after = self.driver.window_handles[1]
                self.driver.switch_to.window(window_after)
                self.driver.find_element(
                    By.XPATH, '//*[@id="identifierId"]').send_keys(email)
                self.driver.find_element(
                    By.XPATH, '//*[@id="identifierNext"]/div/button').click()
                time.sleep(2)
                self.driver.find_element(
                    By.XPATH, '//*[@id="password"]/div[1]/div/div[1]/input').send_keys(password)
                self.driver.find_element(
                    By.XPATH, '//*[@id="passwordNext"]/div/button').click()
                # Pause so any two-factor prompt can be completed by hand
                input('Log in, and press enter to continue...')
                self.driver.switch_to.window(window_before)
            except Exception:
                print("ERROR:\t Couldn't login with credentials.")

        def run(self):
            # Allow location services
            try:
                self.driver.find_element(
                    By.CSS_SELECTOR, '[aria-label="Allow"]').click()
            except Exception:
                print("ERROR:\t Couldn't allow location services.")
            time.sleep(1)

            # Disable notifications
            try:
                self.driver.find_element(
                    By.CSS_SELECTOR, '[aria-label="Not interested"]').click()
            except Exception:
                print("ERROR:\t Couldn't disable notifications.")
            time.sleep(6)

            # Define swipe buttons
            swipe_bar = f'//*[@id="{self.session_id}"]/div/div[1]/div/div/main/div/div/div/div/div[4]/div'
            like_xpath = swipe_bar + '/div[4]/button'
            dislike_xpath = swipe_bar + '/div[2]/button'
            self.like = None
            self.dislike = None
            try:
                self.like = self.driver.find_element(By.XPATH, like_xpath)
                self.dislike = self.driver.find_element(By.XPATH, dislike_xpath)
            except Exception:
                print("ERROR:\t Couldn't assign like or dislike buttons. Trying again ... ")
                self.like = self.driver.find_element(By.XPATH, like_xpath)
                self.dislike = self.driver.find_element(By.XPATH, dislike_xpath)

            # Swipe right on everyone, pausing 0.3 to 2.9 seconds between swipes
            while True:
                try:
                    self.like.click()
                    time.sleep(random.randrange(3, 30) * .1)
                except Exception:
                    input("ERROR:\t Couldn't swipe... Press enter to resume ")


    email = 'blah@whatever'
    password = 'blah'

    bot = Bot()
    bot.login(email, password)
    bot.run()
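
The bot above repeats the same "try, sleep, try once more" pattern and the same long XPaths in several places. As a rough sketch of how that could be factored out, the block below adds a generic `retry` helper and builds the like and dislike XPaths from one template. The names `retry`, `SWIPE_BAR` and `swipe_xpaths` are hypothetical, and the paths are copied from the code above, so they will break whenever Tinder changes its page layout.

```python
import time


def retry(action, attempts=3, delay=2.0, sleep=time.sleep):
    """Call action() until it succeeds or the attempts run out.

    Returns whatever action() returns; re-raises the last error if
    every attempt fails.
    """
    for attempt in range(1, attempts + 1):
        try:
            return action()
        except Exception:
            if attempt == attempts:
                raise
            sleep(delay)


# Hypothetical constants built from the XPaths used by the bot.
SWIPE_BAR = '//*[@id="{sid}"]/div/div[1]/div/div/main/div/div/div/div/div[4]/div'
LIKE_XPATH = SWIPE_BAR + "/div[4]/button"
DISLIKE_XPATH = SWIPE_BAR + "/div[2]/button"


def swipe_xpaths(session_id):
    """Fill the session id into the like and dislike XPath templates."""
    return (LIKE_XPATH.format(sid=session_id),
            DISLIKE_XPATH.format(sid=session_id))
```

Inside `run`, a lookup could then read `self.like = retry(lambda: self.driver.find_element(By.XPATH, like_xpath))`, which keeps trying for a few seconds instead of failing after one retry.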

The Deciding

Now for the cool, if a little uncomfortable, part.

The idea is to turn an image of a person into a descriptive list of numbers, called a feature vector. The training data is a bunch of feature vectors, each labelled with a 1 (for yeah) or 0 (for nah). A model learns non-obvious trends in this data and predicts 0 or 1 when fed an unseen feature vector.

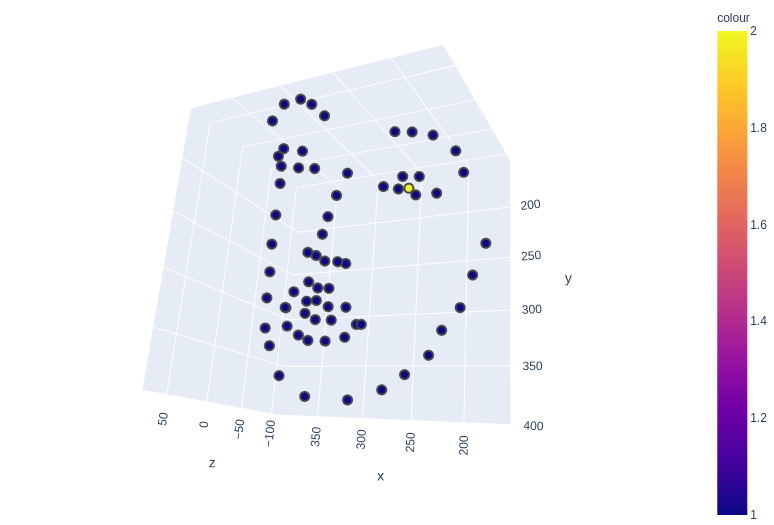

We can use deepface and face-alignment to extract demographics and 3D landmark information.

Then calculate distance ratios related to facial attractiveness (Schmid et al.) to construct the final feature vector and address any landmark scaling issues.

    return [
        obj["age"],
        1 if obj["gender"] == "Woman" else 0,
        # Distance ratios between facial landmarks
        face_width / middle_third,
        nose_chin / nose_lips,
        nose_chin / pupil_nose,
        nose_width / nose_lips,
        lip_height / nose_width,
        # Label: last element, stripped off before prediction
        1 if label == 'hot' and obj["gender"] == "Woman" else 0
    ]
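
To see why ratios sidestep the scaling problem, here is a minimal sketch. The variable names (`face_width`, `nose_chin` and so on) come from the snippet above, but the named landmark points and the pairs being measured are assumptions made for illustration; they are not the actual landmark indices used in `img_to_feature_vec`.

```python
import math


def distance(p, q):
    """Euclidean distance between two points of equal dimension."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def ratio_features(pts):
    """Build distance ratios from a dict of named (x, y, z) points.

    The point names are hypothetical stand-ins for real landmarks.
    """
    face_width = distance(pts["left_cheek"], pts["right_cheek"])
    middle_third = distance(pts["brow_centre"], pts["nose_base"])
    nose_chin = distance(pts["nose_base"], pts["chin"])
    nose_lips = distance(pts["nose_base"], pts["lip_centre"])
    pupil_nose = distance(pts["pupil_midpoint"], pts["nose_base"])
    nose_width = distance(pts["nose_left"], pts["nose_right"])
    lip_height = distance(pts["lip_top"], pts["lip_bottom"])
    return [
        face_width / middle_third,
        nose_chin / nose_lips,
        nose_chin / pupil_nose,
        nose_width / nose_lips,
        lip_height / nose_width,
    ]


def scaled(pts, factor):
    """Return a copy of pts with every coordinate multiplied by factor."""
    return {name: tuple(c * factor for c in p) for name, p in pts.items()}


# Made-up coordinates, purely for demonstration.
face = {
    "left_cheek": (-7, 0, 0), "right_cheek": (7, 0, 0),
    "brow_centre": (0, 3, 0), "nose_base": (0, -3, 0),
    "chin": (0, -9, 0), "lip_centre": (0, -5, 0),
    "pupil_midpoint": (0, 1, 0),
    "nose_left": (-2, -3, 0), "nose_right": (2, -3, 0),
    "lip_top": (0, -4, 0), "lip_bottom": (0, -6, 0),
}
```

Calling `ratio_features(face)` and `ratio_features(scaled(face, 2.5))` gives the same values up to floating-point error, so a face photographed closer to the camera yields the same features.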

Throwing the dataset at popular classification algorithms should yield something like the following.

    ---------------------- SVC -----------------------
    Cross Validation Score: 0.933142857142857
    Polynomial Degree 2
    Confusion:
    [[150  25]
     [  0 175]]
    Accuracy: 0.9285714285714286

    Cross Validation Score: 0.9342857142857144
    Polynomial Degree 3
    Confusion:
    [[153  22]
     [  9 166]]
    Accuracy: 0.9114285714285715

    Cross Validation Score: 0.9308571428571429
    Polynomial Degree 4
    Confusion:
    [[153  22]
     [  6 169]]
    Accuracy: 0.92

    Cross Validation Score: 0.933142857142857
    Kernel: linear
    Confusion:
    [[150  25]
     [  0 175]]
    Accuracy: 0.9285714285714286

    Cross Validation Score: 0.9297142857142857
    Kernel: rbf
    Confusion:
    [[150  25]
     [  1 174]]
    Accuracy: 0.9257142857142857

    Cross Validation Score: 0.5
    Kernel: sigmoid
    Confusion:
    [[  0 175]
     [  0 175]]
    Accuracy: 0.5

    ----------------- Random Forest ------------------
    Cross Validation Score: 0.9205714285714286
    N_estimators: 10
    Confusion:
    [[159  16]
     [ 15 160]]
    Accuracy: 0.9114285714285715

    Cross Validation Score: 0.9279999999999999
    N_estimators: 50
    Confusion:
    [[155  20]
     [  8 167]]
    Accuracy: 0.92

    Cross Validation Score: 0.9285714285714286
    N_estimators: 100
    Confusion:
    [[156  19]
     [  5 170]]
    Accuracy: 0.9314285714285714

    Cross Validation Score: 0.9279999999999999
    N_estimators: 200
    Confusion:
    [[153  22]
     [  5 170]]
    Accuracy: 0.9228571428571428

    Cross Validation Score: 0.9274285714285714
    N_estimators: 500
    Confusion:
    [[153  22]
     [  5 170]]
    Accuracy: 0.9228571428571428

    ----------------- Decision Tree ------------------
    Cross Validation Score: 0.933142857142857
    Max_depth: 2
    Confusion:
    [[150  25]
     [  0 175]]
    Accuracy: 0.9285714285714286

    Cross Validation Score: 0.9217142857142857
    Max_depth: 5
    Confusion:
    [[154  21]
     [  7 168]]
    Accuracy: 0.92

    Cross Validation Score: 0.9062857142857143
    Max_depth: 10
    Confusion:
    [[158  17]
     [ 11 164]]
    Accuracy: 0.92

    Cross Validation Score: 0.8908571428571429
    Max_depth: 20
    Confusion:
    [[160  15]
     [ 16 159]]
    Accuracy: 0.9114285714285715

    Cross Validation Score: 0.8931428571428572
    Max_depth: 50
    Confusion:
    [[160  15]
     [ 11 164]]
    Accuracy: 0.9257142857142857

    Cross Validation Score: 0.8954285714285714
    Max_depth: 100
    Confusion:
    [[159  16]
     [ 15 160]]
    Accuracy: 0.9114285714285715

    -------------- Logistic Regression ---------------
    Cross Validation Score: 0.9348571428571428
    Confusion:
    [[151  24]
     [  2 173]]
    Accuracy: 0.9257142857142857

    ---------------------- KNN -----------------------
    Cross Validation Score: 0.9171428571428573
    Confusion:
    [[153  22]
     [  5 170]]
    Accuracy: 0.9228571428571428

    ------------------ Naive Bayes -------------------
    Cross Validation Score: 0.933142857142857
    Confusion:
    [[150  25]
     [  0 175]]
    Accuracy: 0.9285714285714286
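
Accuracy alone hides which way a model errs, and for swiping a false "yeah" costs something different from a false "nah". The sketch below derives accuracy, precision and recall from a 2x2 confusion matrix. It assumes scikit-learn's layout (rows are true labels, columns are predictions, ordered 0 then 1); the `summarise` helper is an illustrative name, not part of the original code.

```python
def summarise(confusion):
    """Return accuracy, precision and recall for a 2x2 confusion matrix.

    Assumes rows are true labels and columns are predicted labels,
    in the order [0 (nah), 1 (yeah)].
    """
    (tn, fp), (fn, tp) = confusion
    total = tn + fp + fn + tp
    return {
        "accuracy": (tn + tp) / total,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Two of the matrices reported above.
linear_svc = summarise([[150, 25], [0, 175]])
sigmoid_svc = summarise([[0, 175], [0, 175]])
```

Under that assumption, the linear-kernel SVC never misses a "yeah" (recall 1.0) but 25 of its 200 right swipes are wrong (precision 0.875), while the sigmoid kernel simply predicts 1 for everything.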

Logistic Regression slightly and consistently outperformed other models for me. Pickle whichever works best for you, then integrate prediction into the bot.

    # Save the trained model
    with open(os.path.join('./models/', 'lr.sav'), 'wb') as f:
        pickle.dump(clf, f)

    # Load it again inside the bot
    with open('./models/lr.sav', 'rb') as f:
        clf = pickle.load(f)

    while True:
        try:
            time.sleep(4)
            self.curr_img = self.driver.find_element(
                By.XPATH, f'//*[@id="{self.session_id}"]/div/div[1]/div/div/main/div/div/div/div/div[3]/div[1]/div[1]/span[1]/div')
            attr_str = self.curr_img.get_attribute('style')

            # Use regex to extract background image url
            url = re.findall(r'url\((.*?)\)', attr_str)[0][1:-1]

            print('Downloading image... ', end='')
            download_image(url)
            time.sleep(2)
            print('OK')

            # The label argument is a placeholder; it is stripped off below
            test = img_to_feature_vec('test.jpg', 'hot')
            if test is not None:
                test = test[:-1]
                result = clf.predict([test])[0]
                if result == 1:
                    self.like.click()
                    move_image('test.jpg', f'./dataset/hot/{uuid.uuid4()}.jpg')
                else:
                    self.dislike.click()
                    move_image('test.jpg', f'./dataset/not/{uuid.uuid4()}.jpg')
                continue

            # No feature vector could be built: swipe left
            self.dislike.click()
            time.sleep(random.randrange(3, 30) * .1)
        except Exception:
            input("ERROR:\t Couldn't swipe... Press enter to resume ")

        # Clean up an image that was downloaded but never moved
        if os.path.exists('./test.jpg'):
            os.remove('./test.jpg')
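
The loop above calls `download_image` and `move_image` without showing them. The block below is a guess at what such helpers might look like, not the author's versions. It uses the standard library's `urllib` rather than requests so that it runs without extra installs, and the `background_url` function is a hypothetical wrapper around the same regex, written to tolerate a missing match and either quote style.

```python
import os
import re
import shutil
import urllib.request

URL_PATTERN = re.compile(r'url\((.*?)\)')


def background_url(style):
    """Pull the image URL out of an inline CSS style string, or None."""
    match = URL_PATTERN.search(style)
    if match is None:
        return None
    # Drop optional single or double quotes around the URL.
    return match.group(1).strip('"\'')


def download_image(url, dest="test.jpg"):
    """Save the resource at url to dest and return dest."""
    with urllib.request.urlopen(url) as response, open(dest, "wb") as out:
        shutil.copyfileobj(response, out)
    return dest


def move_image(src, dest):
    """Move src to dest, creating the destination folder if needed."""
    os.makedirs(os.path.dirname(dest) or ".", exist_ok=True)
    shutil.move(src, dest)
    return dest
```

Creating the destination folder inside `move_image` means the `./dataset/hot` and `./dataset/not` directories do not have to exist before the first run.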

And that's it, good luck, sadboi. Some considerations, though.

- Almost definitely don't tell your date about this

- Automating stuff is against Tinder's usage agreement. Proceed with caution or don't proceed

- You may want to extend this further with deep learning or PCA to identify redundant features

- For this to be fully autonomous, you'd have to disable two-factor auth, catch various pop-ups and run Selenium headless -- I'd recommend keeping an eye on it to manage complications
